The Business Case for Digital Transformation

Unlock New Revenue Streams. Innovate Product Offerings.

Build Resilience Against Market Disruptions.

Numerous white papers and surveys indicate that most CEOs believe data-driven transformation is crucial to their companies’ futures. Furthermore, the popularity of generative AI tools such as ChatGPT, which are built on large language models (LLMs), suggests that this technology is within practical reach. At the very least, manufacturers know they need to start investing in generative AI technologies to stay competitive.

The end goal is for embedded AI and Machine Learning models to fuel predictive analytics and proactive decision-making, optimizing operations and maximizing quality, production, and yield.

We routinely hear that companies lack visibility into their plant operations. Yet for strategic planning and flexibility, a company needs to know the current state of its operations. Digital transformation empowers companies to optimize operations, enhance decision-making, and improve customer experiences through data-driven insights and automation. It helps companies stay competitive in rapidly evolving markets by reducing costs, increasing efficiency, and accelerating time-to-market for new products and services. By leveraging advanced technologies such as AI and Machine Learning, businesses can unlock new revenue streams, innovate their product offerings, and build resilience against market disruptions.

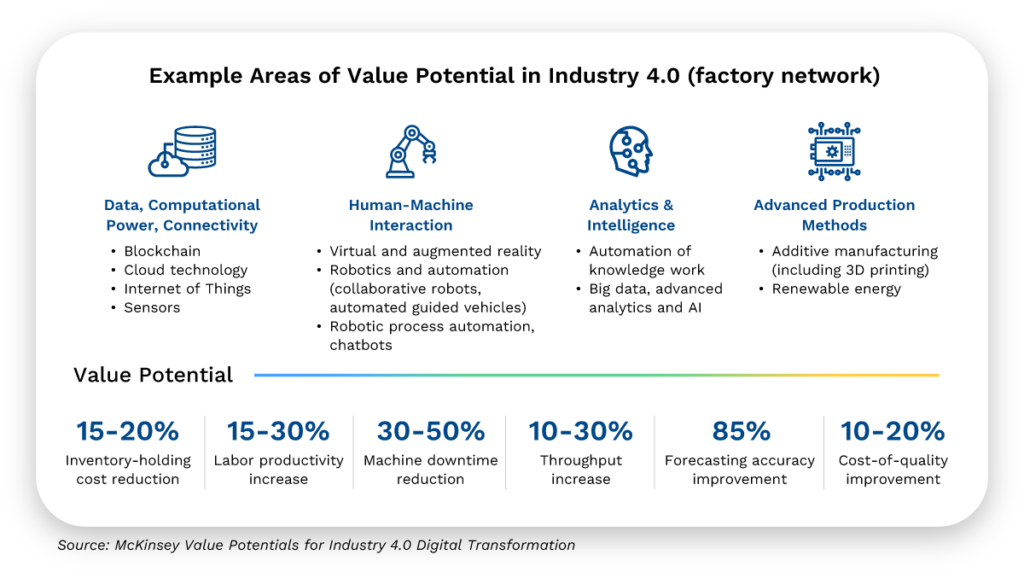

Digital Transformation can unlock significant value across the entire supply chain and throughout the factory network.

Where to Start Your Digital Transformation Investments

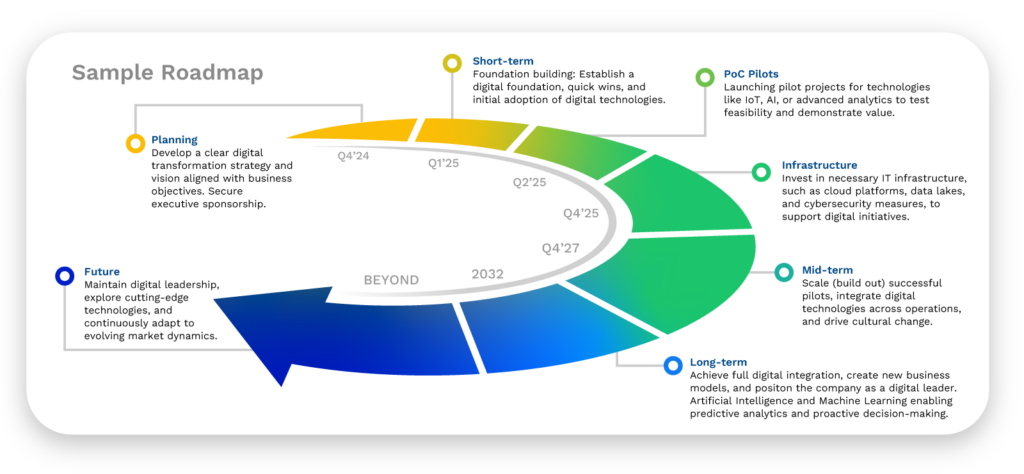

Your first actions should be to invest in a clear strategy and vision aligned with your business and to secure executive sponsorship. A digital strategy gives a company a clear roadmap for using technology to achieve its business goals, keeping digital initiatives aligned with overall company objectives.

A well-defined digital strategy helps businesses navigate the complexities of digital transformation, prioritize investments, and address potential challenges such as data security, integration, and change management. It enables companies to stay competitive by adapting to market trends, enhancing customer engagement, and continuously improving operational efficiency in an increasingly digital world.

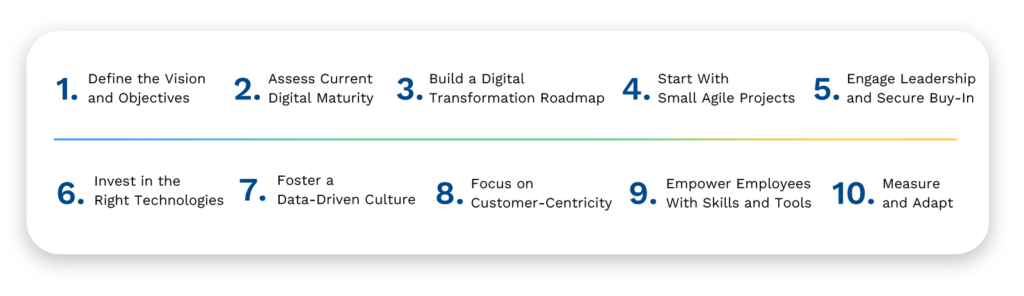

Steps to Creating a Digital Transformation Strategy for a Sample Operational Technology (OT) Facility

Now that you understand the essentials of getting started on your Digital Transformation journey, my next article will explain the ideal architecture needed to support digital transformation. In the meantime, please reach out to me with your comments or to start a dialog.